2025 on Goodreads by Various

My rating: 4 of 5 stars

2025 was a year of upheaval for me. Virtually everything changed: my job, my relationship, and even my country. Strangely, this has been true for many of the people I know (my theory is that the stability we cobbled together during the pandemic is finally unwinding). In any case, this didn’t leave as much room for reading, which is a pity. Even so, the books I did read provided comfort and guidance in these strange times, for which I’m grateful.

New York City was a major topic of my reading. I kicked off the year with The Works, an excellent book about how the city gets its electricity and water, how it gets rid of its garbage, how it controls traffic and moves its citizens. Even more revelatory was Times Square Red, Times Square Blue, by Samuel R. Delany, which explores the ways that cities promote or discourage genuine human contact. Ottessa Moshfegh’s superlative novel, My Year of Rest and Relaxation, manages to shed just as much light on what it is like to live in this strange place.

Apart from this, my reading was kind of a mixed bag. Esther Perel’s two major books on long-term relationships were extremely interesting for her wide and somewhat unconventional perspective. Vicky Hayward’s translation of an 18th-century Spanish cookbook managed to be one of the most fascinating works of history that I’ve encountered in a long while. David Grann’s books—on the Osage murders and Percy Fawcett’s quest to discover the Lost City of Z—were both thrilling; and I continued my slow exploration of Murakami’s fiction.

But the most significant event of my year in books was the publication of my novella, Don Bigote. Thanks to the editors at Ybernia, Enda and María, I even had my first book event, and got to talk about my writing in public for the first time in my life. To top it off, I contributed two chapters to a book about living in Madrid, Stray Cats—ironically, just in time to decide to move away from that lovely city. In a year in which I often felt low and lost, these accomplishments helped to get me through.

Yet perhaps my favorite moment was being able to meet and interview Warwick Wise, whose writing I greatly admire, and whom I met through Goodreads. Even after all these years, then, this site continues to enrich my life.

View all my reviews


Review: Persians, by Lloyd Llewellyn-Jones

Persians: The Age of the Great Kings by Lloyd Llewellyn-Jones

My rating: 3 of 5 stars

This book begins with a great promise: to correct the distorted view that so many of us have of the Persian Empire. This distortion comes from two quite different directions.

In the West, our view of the Persian Empire has largely been filtered through Greek sources, Herodotus above all. This is nearly unavoidable, as the Greeks wrote long and engaging narrative histories of these times, while the Persians—although literate—did not leave anything remotely comparable. Yet the Greeks were sworn enemies of the Persians, and thus their picture of this empire is hugely distorted. Taking them at their word would be like writing a history of the U.S.S.R. purely from depictions in American news media.

The other source of bias is from within Iran itself. Starting with Ferdowsi, who depicts the Persian kings as a kind of mythological origin of the Persian people, the ruins of this great empire have been used to contrast native Persian culture with the language, religion, and traditions imported by the Muslim conquest. In more recent times, Cyrus the Great has become a symbol of the lost monarchy, a kind of secular saint—a tolerant ruler, who even originated the idea of human rights. This purely fictitious view is, at bottom, a kind of protest against the current oppressive theocracy.

But this book does not live up to its promise. To give the author credit, however, I should note that the middle section of the book—on the culture, bureaucracy, and daily life of the empire—is quite strong. Here, one feels that Llewellyn-Jones is relying on archaeological evidence and is escaping from the old stereotypes. The epilogue is also a worthwhile read, detailing the ways that subsequent generations have used (and abused) the history of this ancient power.

Yet the book falters in the chapters of narrative history. Here, Llewellyn-Jones is forced to rely on the Greek sources, and as a result many sections feel like weak retellings of Herodotus, with a bit of added historical context. Even worse, there are several parts in which I think he is not nearly skeptical enough regarding the stories in these Greek authors. At one point, for example, he retells the story of Xerxes’s passionate love affair with the princess Artaynte—a story taken straight out of Herodotus, and which has all of the hallmarks of a legend. That Llewellyn-Jones decides to treat this story as a fact, and does not even gesture towards its source, is I think an odd display of credulity in a professional historian.

The irony is that the final section of the book—full of scandalous tales taken out of Greek authors, depicting the decadence and depravity of the Persian court—only reinforces the very stereotypes that Llewellyn-Jones sets out to destroy. The really odd thing, in my opinion, is that there are no footnotes or even a section on his sources, so the reader must take him at his word—or not. I suspect this omission is to cover up the embarrassing fact that he relied so heavily on Herodotus.

This is a shame, as the Persian Empire does deserve the kind of reevaluation he proposes. It is fascinating on its own terms, and not just as a foil to the noble Greek freedom-fighters. Still, I think this book is a decent starting point for anyone interested in the subject. One must only read it with a skeptical eye.


Collision 2025: The Joy of Losing

I got off the bus into a bright August day, climbed the stairs to the Meadowlands Exposition Center, and then gave my ID to a young man at the door. He handed me a lanyard that read: “COMPETITOR.” For the first time since high school, I was going to compete in a video game tournament. And it was a big one: Collision 2025.

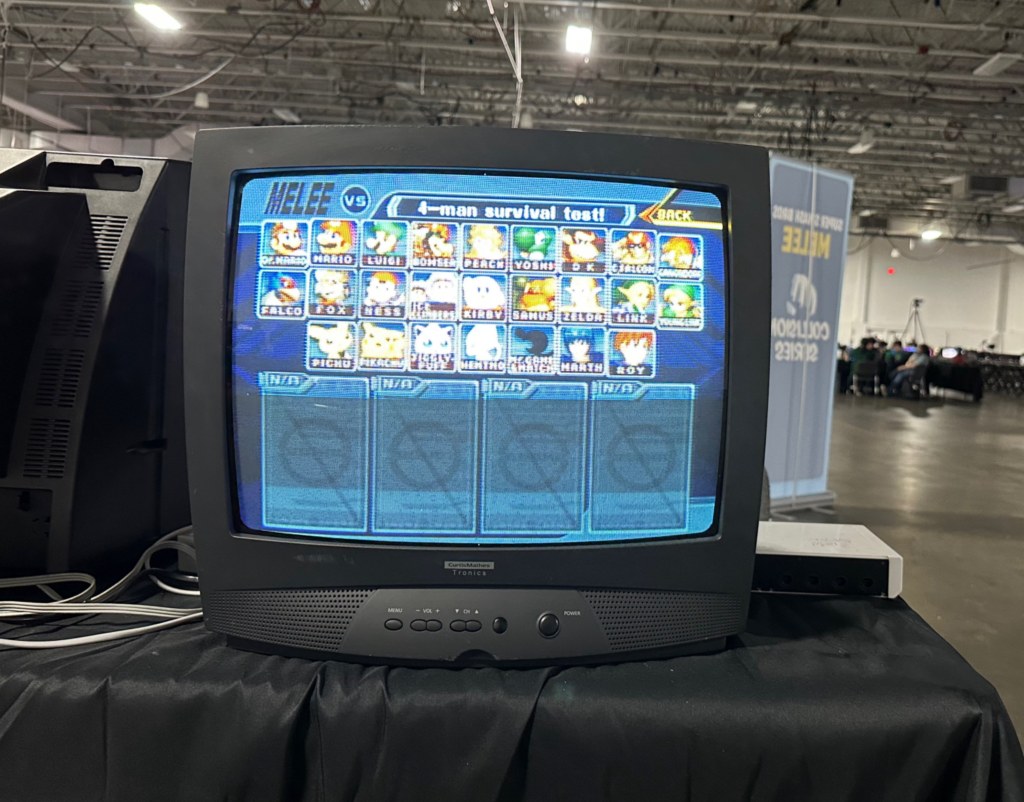

The space was huge, gray, and bare. On both sides of the cavernous room, rows and rows of monitors and consoles were set up, ready to be played. In the back was the main stage, where the final matches would be projected onto a big screen. By the time I arrived, early on the first day, relatively few people were about. The main events wouldn’t begin until the following day.

I walked around and tried to get my bearings. Nothing much was happening. A few dozen people were playing so-called “friendly” matches (not competitively), while others were simply milling about. Yet there was a quiet intensity to the space. Though the event was dedicated to video games in which cartoon characters beat each other up, it was clear that the attendees were not here to have fun. They were here to play, and to win.

Suddenly, a strange panic began to take hold. It was a feeling that I hadn’t had since I was a teenager: the paralyzing fear of being badly beaten at a video game. From the first moment, I could tell that I was simply not at the same level as even the average players here; and I felt sure that, if they saw me play, I would be laughed at. I felt ridiculous: a man at the age of 34, having lived in a foreign country, published several books, and worked for years as a teacher—paralyzed with fear at the thought of losing a video game. But the anxiety was real.

Indeed, it was so real that I had to leave the venue. Breathing heavily, I sat at a bus stop and even contemplated leaving, despite having paid a significant amount to sign up. But that would be cowardly, I decided. So I walked to a nearby diner, sat down, and ate an omelette. Then, still rattled, I walked to the Mill Creek Marsh Trail, a park that consists of a path through restored wetland. There, I took a deep breath, put on my headphones, and focused: “It’s just a freaking video game,” I told myself: “I can do this.”

I should give you some background. Super Smash Bros. Melee, released in 2001 for the GameCube, is the second installment in one of Nintendo’s most popular franchises. Despite its age, the game is still played avidly: its fast and complex mechanics make it the most competitive entry in the series.

As I’ve written about elsewhere, this game was a big part of my teenage years. I played it with friends and neighbors, and even brought it to school to play during breaks. And after one of my college suite mates brought his old GameCube to our dorm, I once again went through a heavy phase of Melee. But I hadn’t touched a controller since 2013, when I graduated college.

Despite this, I maintained an active interest in the game. Though I’ve never been a sports fan, I somehow became a fan of competitive Melee. Every so often, I would lose myself down a YouTube black hole, watching game after game after game. And this, despite fancying myself too enlightened and cultured for video games. For a long time, it was a secret vice. And despite my shame, I dreamed of someday playing it again.

Then, during my Christmas break, 2024, something fateful happened: my brother discovered our old GameCube in the basement of my mom’s house. Inside was Super Smash Bros. Melee. It even had our old memory card. For the first time in over a decade, I could play my favorite game. It was heaven: surrounded by all of my old friends, playing the exact game we used to play, rediscovering the pure joy of being kids. I enjoyed it so much, in fact, that I decided to take the GameCube with me back to Spain.

Eight months of practice later—most of it on my own, against the computer—I decided that it was time that I take my first real step into the world of Melee. My brother sent me the link to Collision 2025, a large tournament set to take place in New Jersey (indeed, so many important players would attend that it was classed a “supermajor”), and I decided that I had to sign up, even though I had no chance of even making it past the first round.

So here I was. I was still so nervous that I decided that I couldn’t allow myself to hesitate again. Thus, I walked back into the expo center, plugged my controller into a free console, and started to warm up against a computer. After just a minute, however, somebody sat down next to me. “Wanna play?” he asked.

Thus began my own friendlies. As I expected, I was far worse than virtually everyone in attendance, and our matches mainly consisted of me being tossed around like a rag doll. Still, it was a fascinating experience—to finally see for myself how I measured against serious players. It was like playing against a professional tennis player after practicing in the park with friends: we were hardly playing the same game.

Yet much to my relief, everyone I spoke to was welcoming and kind, even as they whooped me. And I learned much about the game which I would never have learned by simply following it online.

For example, I discovered that controllers are an entire world unto themselves. Throughout the venue, there were several stands selling tricked-out controllers—for hundreds of dollars—or offering customization services, swapping out buttons and joysticks. And here I was, with an original, unmodified GameCube controller from 2001.

One player I played, JKJ (everyone goes by a “gamer tag” at these events; mine is Royboy), gave me a close-up look at his controller: it had fabric wrapped around the handles, and extra notches around the joystick. These notches are a controversial topic, as they make it easier for players to angle their joysticks more precisely. JKJ explained that he thought notching was unsportsmanlike, but he started doing it because the practice is so widespread.

Another player I met—whose gamer tag I can’t recall, but whose real name was John—played with a different kind of controller entirely. It was a kind of black rectangle, the size of a traditional keyboard, but with much larger buttons. This is the B0XX Controller, which was developed by the smasher Hax$ (who died under tragic circumstances) for those suffering from joint and wrist problems.

“Are you squeamish?” he asked, after I inquired about the controller. I said “No,” and he showed me a video taken by his surgeon: his wrist was sliced open, and a gloved hand was manipulating his arm to show the tendons, muscles, and nerves. John explained that he was practicing on a traditional controller (“frame-perfect ledgedashes,” if you want to know the technique) when he felt something pop in his wrist. Apparently, a tendon had snapped out of its sheath, causing severe pain.

Now, after surgery, he has a scar and uses this ergonomic controller. Just because it is a video game doesn’t mean that you won’t get an injury.

I spent several hours playing and socializing before deciding to call it a day. The next day, Saturday, was when the real tournament began. At least I was warmed up.

For me, Melee is pure nostalgia. But the next day brought even more nostalgia, in the form of Jackie Li. Jackie grew up with me and my brother. He was over at our house so often that he might as well have been a third sibling. We would spend hours each day together. My mom would give him rides to school in the morning. He knew everyone in my family—even coming up to see my grandparents in the Catskills.

As with many kids, our main activity together was video games. We played them obsessively. And Jackie was always the best. Nowhere was this more true than with Melee. Although I took the game very seriously, spending hours honing my technical skills, and although I could beat everyone else, often quite easily, I could never beat Jackie. He would win nine out of ten games, and no amount of practice ever seemed to bridge that gap.

My failure to beat him even followed me to college, as we both attended Stony Brook University. My suite mate got a GameCube, and I commenced to beat everyone who sat down to play me. But when Jackie came over, I still couldn’t win.

But all of us drifted apart after college. Partly it was because I stopped playing video games, partly it was simple neglect, and partly it was my move to Spain. But the impending tournament jogged my memory, and I decided that I had to reach out to Jackie. More than a decade had gone by, and I didn’t even have his phone number. So I sent him a message through LinkedIn, inviting him to come along. He agreed, and on Saturday morning he met me and my brother in Port Authority, looking just as he looked the last time I’d seen him.

Seeing somebody from your past after so long is almost trippy. It is a disorienting mixture of familiarity and strangeness. You know one another intimately, and yet hardly know one another at all. But it did make one thing clear to me: Our memories are stored in other people.

As I spoke to Jackie, huge swaths of my childhood came flooding back—nothing terribly important, but lots of little things, things that I hadn’t thought about in a long time.

So much of what we think of as “maturing” involves forgetting—leaving behind old identities that no longer serve us. There is definitely a power in this, the power of reinvention. But there is also a kind of spiritual danger, I think, since we can forget important aspects of ourselves.

Becoming an adult, I’ve found, eventually means integrating some of the identities we left behind, at least to some degree. And though it’s silly, I think my own changing attitude towards video games illustrates this process. When I was younger, video games were pure fun—absolutely engrossing, an escape from life, a competitive thrill. But at a certain point I forswore them: I decided that they were for losers, that they were a huge waste of time, and that they brought out the ugly competitive side of players.

This was useful to me, since it allowed me to focus on socializing, on music, on school, and so on. But this left a huge part of my childhood as a black hole, a write-off. Now that I’m older, I’ve come to see video games as a qualified good. They are, after all, just a type of game; and our love of playing and creating games is one of our species’s most distinguishing qualities. Of course, their nature makes them more addictive than, say, Parcheesi. But nobody who has witnessed football culture in Europe, or even the steel-willed dedication of an avid chess player, can argue that video games are uniquely bad in this respect.

Indeed, crazy as it sounds, I am now inclined to say that Super Smash Bros. Melee must be one of the greatest games ever made. And now I was ready to compete.

The three of us entered the venue and sat down to play. Jackie was going to help me warm up before the tournament. This was like being in a dream. Here I was, over a decade later, ready to play my arch-rival—the one who I learned with, the one who always beat me. All these years later, what would the result be?

Strangely, anticlimactically, the result was nearly the same as it had always been: He still had a serious edge on me, beating me in about 90% of our matches. (It turns out that he had also been practicing occasionally over the years.) And this brings me to another fascinating aspect of this game, and of games in general: the reality of skill.

Now, if you don’t know much about Melee, the game could seem to involve a great deal of luck. After all, players are making split-second decisions in situations that, chances are, they have never seen before—at least not in that precise configuration. The same is true of many games and sports: to the untrained eye, they seem to be up to chance as much as skill. But the reality is that skill is something as real and tangible as a controller: it is measurable and tends to be fairly consistent through time.

The results of the tournament speak for themselves: the first, second, and third place finishers (Zain, Hungrybox, and Joshman, respectively) were the first, second, and third place seeds. In other words, the results were exactly what was anticipated based on the players’ rankings.

This is not to say that there weren’t “upsets.” The 4th-place finisher, lloD (a practicing doctor, as it happens), was seeded 13th; and the 7th-place finisher, Zamu, was seeded 23rd. Clearly, a player’s official rank isn’t a perfect reflection of their skill at a given moment (and we should be grateful for that, as the game would be boring to watch otherwise). But what strikes me as more notable is that even these “upsets” weren’t radical miscalculations. It is not as if a player came out of nowhere to finish in the top 8.

Another striking feature of skill is how it can appear to follow an exponential scale. I will illustrate this point with my own tournament experience. My first-round opponent was a player who went by “notsmoke.” He beat me handily, winning every game by a significant margin. The set was over in less than 10 minutes. But as soon as I congratulated my opponent, his second-round pick sat down for the match. This was Agent, a ranked player, who proceeded to beat notsmoke nearly as badly as I’d just been beaten. Agent was, in turn, knocked out handily by Zuppy, who in turn was eliminated without much ado by Moky. And on and on.

The striking thing about all this is that, to me, the skill levels of (say) Agent, Zuppy, and Moky appear to be nearly indistinguishable, and yet there are significant chasms between each of them. And the gap between Moky and Zain (the winner of the tournament) is just as significant still.

To round out my own tournament experience, I had one more match to play before I was knocked out entirely. My new opponent was ENFP, who beat me even more dramatically than notsmoke (though rather politely, I should add). And that was it for my stint as a competitive gamer.

The rest of our time at the Meadowlands Exposition Center was just spent watching. And here I want to add a note on the demographics. As you might imagine, the large majority of the attendees were male (stereotypes do sometimes hold true). But I was surprised, and charmed, by the significant number of trans people. When I was a kid, video game culture was quite misogynistic and homophobic (and perhaps corners of it still are); but now, players are introduced along with their pronouns. Most everyone was in their 20s and 30s, though some were considerably younger. For example, one up-and-coming player, OG Kid, is (as his name suggests) still a teenager—meaning that he is younger than the game itself.

One jarring aspect of the tournament was seeing so many famous players. Virtually all of the major competitors were in attendance, with the notable absence of Wizzrobe (who dropped out last minute), Cody Schwab (the current #1 player), and Mang0 (often considered the greatest player of all time, who had just been banned due to his drunken misbehavior at another event). Everywhere I looked, there were faces I recognized: Axe, Salt, Junebug, Aura, Nicki, Aklo, Jmook, Trif… I was starstruck, but then realized that none of these people is, in truth, really famous. They were legends only in the context of a fighting game that is over two decades old.

Floating among the crowd, his hair dyed blond, was Zain—the current #2 player and the heavy favorite to win. He was surrounded by people who knew exactly who he was, who admired and envied him; and yet he seemed to be separate from the crowd, just drifting from place to place. At one point, he stood next to me as I observed a match—the tournament’s eventual winner next to its last-place finisher. And it occurred to me that I had never stood so close to somebody so good at anything.

Zain stood there—alone in a mass of people dedicated to this game, people who had collectively devoted hundreds of thousands of hours to honing their own skills—secure in the knowledge that he could beat any one of them. And it made me reflect on why people devote themselves to such seemingly pointless activities.

Aside from fun (and the game is very fun), the most obvious answer is escapism: to focus your attention on something unrelated to your daily life, where there are no consequences. But that is not the whole story. For some, it is the community—being a part of like-minded people, the chance to make friends and share interests. And the game also offers an opportunity to forge a new identity—nerdiness transmogrified into coolness.

But for Zain, and those like him, I realized that they were here for something else: the pure pursuit of a skill—skill for the sake of itself, being the best they could be at this one specific task. There is something almost inhuman about it—requiring, as it does, such single-mindedness of purpose that everything else in life becomes secondary. And yet, in a way, this pursuit of skill for its own sake might be the most human quality of all—the quality that drives us to the highest reaches of excellence.

But there is a high price to pay. The irony is that, thanks to my reunion with Jackie, I probably enjoyed the tournament quite a bit more than its champion. And to enjoy things you’re bad at—to enjoy them, especially, with old friends—is also a very human quality.

Review: Stray Cats

Stray Cats: Life in Madrid Through 17 Voices by John Dapolito

My rating: 5 of 5 stars

I met John Dapolito at the Antón Martín metro stop on a cold autumn night. He was smoking a cigarette and scanning the crowd, and when he recognized me he told me to follow him to a nearby bar. I was nervous, as this was a kind of interview. He was looking for writers to contribute to a new volume, a collection of mini-memoirs of people who have moved to Madrid from elsewhere. He wanted them to answer three questions: How has Madrid changed since you moved here? How have you changed? And how has Madrid changed you?

“Nine years?” he said, mulling over my time in Madrid. “Nine years…” his voice trailing off. To many Americans in Madrid, this is quite a long time. But compared to John’s twenty-five, it seemed rather paltry. So we talked about how I could write my essay, what angle I could take, what I could emphasize about my experience to differentiate it from everyone else’s. The next day, I started writing a draft of my essay long-hand, in a notebook—something I seldom do—and now it is a pleasure to see it in print in this collection.

Ironically, in the months since I sent off the final draft to John, I’ve grown to love Madrid more than ever. While I used to feel the need to escape into the sierra every couple of weeks, craving a bit of nature, lately I’ve been content to just stroll around the city, exploring its nooks and crannies, and getting ever more integrated into its peculiar form of life. In short, now that my nine years are nearing ten, I am finally beginning to feel like a proper madrileño, fully at home in this great Spanish metropolis. And now that I have my story of Madrid in print, I feel more than ever that I’ve really made a home here.

The stories in this volume have many common themes: learning the language, enjoying the nightlife, resenting the gentrification, and so on—themes that would have appeared had this book been written about Budapest or Bangkok. But beneath these superficial commonalities are what make the essays worth reading—insights into Madrid and, more often, into the person writing about it. And these essays are illustrated by black-and-white photos by the editor, John. I remember him opening a binder of them at the bar, during our first meeting, and admiring their atmosphere, how they really captured an aspect of the beauty of this city. And I thought to myself: “I want to be a part of this project.”


Review: Times Square Red, Times Square Blue

Times Square Red, Times Square Blue by Samuel R. Delany

My rating: 5 of 5 stars

When I was an undergraduate, having rashly and unwisely switched my major from chemistry to anthropology, I met with my academic advisor. He asked me: What do I hope to learn as an anthropologist? To this, I gave the answer: I want to walk through Times Square and understand why it is the way it is. Yes, grandiose and pretentious, but it did capture something—the urge to figure out why the world is filled with so much soulless, commercial crap.

I am now suffering the financial consequences of studying anthropology, and not much closer to enlightenment. Thanks to this book, however, I do feel closer to understanding that mecca of American consumerism: Times Square.

This is a highly unusual book. Delany, who usually writes science fiction, set out to write a work of urban studies. And yet it is just as much a memoir as an academic analysis, and it comes to its point in a very roundabout way. Even so, it is easily among the best books about New York City I have ever read.

The book is divided into two essays, originally published independently. The first, “Times Square Blue,” recounts Delany’s experience of the old, seedy Times Square—the Times Square of peep shows, prostitutes, drugs, and sex shops. Specifically, it focuses on the porn theaters, places which became gay cruising grounds, despite showing almost exclusively straight porn. Delany spent decades visiting these theaters and paints a memorable portrait of this now unimaginable Times Square.

Yet this part of the book is not prurient. Delany doesn’t write to titillate the reader, or even to mourn a part of the city that has disappeared. He writes, instead, to illustrate an idea about what makes cities work. It is really an expansion of what Jane Jacobs said in her classic book on the subject: that cities need to foster contact between different sorts of people. Delany merely adds a sexual dimension to this analysis, and he shows how his own search for men threw him into contact with all sorts of people whom he would never have met through work or other socializing.

Part Two, “… Three, Two, One Contact: Times Square Red” expands this observation into a theory. Delany contrasts “contact”—the kind of random meeting of a stranger, such as in line at a grocery store—with “networking,” which is a more formalized way of meeting people, such as at a book convention. An important difference between the two is that, in the former, it is common to meet people of different backgrounds and socio-economic classes, while the latter is usually restricted to members of the same class.

Delany asserts that much of the modern world is intentionally created to promote networking and to discourage contact. And the redevelopment of Times Square is a case in point. Whereas it was possible to go to the old Times Square and meet all sorts of people, in the Times Square as it exists today there are simply tourists and people trying to make money off of tourists. And very few people who visit Times Square now, I reckon, meet anyone at all.

There are further aspects of Delany’s analysis—much of it in a Marxist vein—but to me the pleasure of this book was simply in the love of city life that he exudes. On every page, the reader can feel that he simply enjoys meeting people of different sorts, and finds that it enriches his life. It is a wonderful antidote to the sometimes suffocating loneliness that big cities can engender—the feeling of being surrounded by people, and yet completely ignored. While reading this book on the metro, I suddenly became aware of everyone else on the train as individuals and not faceless mannequins. It made the ride far more pleasant.


Review: The Ethical Slut

The Ethical Slut: A Guide to Infinite Sexual Possibilities by Dossie Easton

My rating: 4 of 5 stars

This is a good example of a book which I almost certainly would never have read had it not been for an excellent review on Goodreads. I refer to the one by Trevor, whose reaction pretty much sums up my thoughts as well as I can hope to. But I would still like to take a crack at it.

Polyamory has been having a kind of cultural moment lately, and I admit that my gut reaction has been consistently negative. The whole idea struck me as naïve and foolish—maybe even a bit sordid—and I resented even being made to think about the topic. But there was a corner of my brain that was unsatisfied with this reaction. After all, I studied anthropology in college, so I knew that lifelong monogamy was hardly a human universal. (Though, in fairness, I’m also unaware of any culture that practices free love as described in this book.) In short, it seemed merely an irrational bias of mine to react so negatively, and I decided I ought not to bow to my biases.

There does seem to be a lot of confusion regarding sex lately. While tolerance of different sexualities is probably at an all-time high, sex itself seems to be on the decline. It is well known that the birthrate in the developed world has been on the wane for decades, and this isn’t due simply to widespread access to birth control. Young people actually seem to be doing less lovemaking, though nobody quite knows why. Added to this are disturbing trends like the rise of the woman-hating “incel” community, or the disheartening phenomenon of “trad wives.” One gets the impression that traditional modes of relating are breaking down, and nobody really knows what to do.

Consensual polyamory is one proposed solution that appears to be growing in popularity—or, at least, in visibility. It promises to be a sexuality for the future, free of shame, sexism, and possessiveness—a sexuality based on purely utilitarian grounds of harmless pleasure. (As a side note, it is curious that John Stuart Mill, the apostle of utilitarianism, was a devoted monogamist. Was he really promoting “the greatest good for the greatest number” by being loyal to his wife?!) However, the notion of free love is hardly new. This book was first published in 1997, and has a great many forebears—from Alfred Kinsey and Margaret Mead, all the way back to the Adamites.

In that spirit, I wanted to go through an exercise from an early chapter of this book, which advises us to think of examples of non-monogamous people we may know of. For me, the people who spring most readily to mind are Simone de Beauvoir and Jean-Paul Sartre, whose open relationship would certainly qualify as consensual polyamory today. And, if I’m not mistaken, Bertrand Russell was an advocate of free love, though I am not sure to what extent he practiced it (besides sleeping with T.S. Eliot’s wife). Martin Heidegger had an affair with Hannah Arendt, which would make him both a polyamorist and—as Arendt was Jewish and Heidegger a member of the Nazi party—an outrageous hypocrite.

Ironically, however, the book I most often think of in this connection is Will and Ariel Durant’s massive historical series, The Story of Civilization. Will and Ariel, for their part, were models of monogamy, having married when Will was 28 and Ariel just 15, and dying within two weeks of each other. Yet one of the main takeaways from their historical writings is that seemingly no one in history (besides them) was a faithful monogamist. Kings had their mistresses, artists their muses (and lovers), and writers their brothels. Even bishops and popes were known to breed discreetly (thus cheating on God Himself). And though Durant treats these sexual connections as failings or missteps, the final impression is that one has got to be very tolerant indeed if one isn’t to condemn the entire human race.

The vast majority of this behavior is admittedly non-consensual, and thus ethically dubious to say the least. Yet considering its apparent ubiquity, one is tempted to make the same argument regarding polyamory as has been made with marijuana: If everyone is already doing it, and society isn’t crumbling, then why not just change the rules and allow it? Instead of building barriers to pleasure, why not just let it rip?

The main argument leveled against polyamory (besides religious ones, which don’t concern me) is jealousy: Namely, that it is a powerful, primitive, and uncontrollable emotion, dangerous to tamper with. Judging from the local news, sexual jealousy is among the most common motivations for murder. Besides that, jealousy is the machine that drives any number of classic stories, from Odysseus viciously murdering his wife’s suitors (and the maids they slept with), to Othello choking Desdemona over a handkerchief, to Madame Bovary’s and Anna Karenina’s tragic deaths for attempting to break free from the bonds of holy matrimony. I mean, for Pete’s sake, our entire foundational theory of psychology is, thanks to Freud, based on sexual jealousy.

Perhaps because of this cultural inheritance, many of us (myself included) are apt to think of jealousy as an implacable force, deeply rooted in our biology, that we must bow to. However, the authors of this work contest this view in their chapter on jealousy, which for me was the heart of this book.

They make many interesting points. For one, considering jealousy as an unyielding fact of our nature is a kind of self-fulfilling prophecy. Lots of unpleasant things are deeply rooted in our collective psyche—envy, phobias, prejudices, violence—which we still try to combat. If we can do our best to overcome, say, fear of public speaking, why not try the same with jealousy?

What is more, despite just having one word for it, “jealousy” comprises several disparate things. It can involve many sorts of emotions—blinding rage, crippling anxiety, or just the sadness of loss—and include all sorts of thoughts, from blame to shame, not to mention all the religious and cultural baggage that comes along for the ride. This may seem like a banal point, but it at least allows you to get a hold of the sensation and examine its roots.

When you do, I suspect that you will find (as I do) that jealousy is a manifestation of anxiety regarding your own inadequacy—the fear of being found wanting in the most intimate sense. Such anxiety would seem obviously to be a “me problem.” The tricky thing about jealousy is that it encourages us to make it a “you problem”—to try to manage it by controlling other people. To use self-help speak, jealousy often involves a failure to “own your feelings,” putting yourself at the emotional mercy of somebody else rather than acknowledging that nobody but you can make you feel a certain way.

(There do seem to be some limits on this philosophy. If somebody stole my bicycle, I would say that person was at least partially responsible for my feeling lousy.)

I admit that I found this view to be quite refreshing, since beforehand I was apt to think of jealousy as something unconquerable. It strikes me as far more productive to view it, instead, as just another one of the many emotional hang-ups we are prone to. And considering that jealousy can be an issue in even committed, monogamous relationships, I found the advice to be valuable indeed. I especially appreciated their realism. They don’t promise that we can achieve a Buddha-like detachment, immune from pangs of the heart. According to them, even “experienced sluts” occasionally suffer! All we can do is develop strategies to cope with it.

The rest of the book was surprisingly useful, too, even for prudes such as myself. Perhaps this should come as no surprise, as polyamorists almost by definition have the most experience dealing with relationships. Even when the information did not really apply to my situation, I found it to be of anthropological interest, as a window into another world. And while I’m not convinced that going to a sex-party is a “radical political act” (all the orgies in the world won’t stop the far-right!), I do think the authors’ sex-positive attitude is probably a lot healthier than how we often think about sex—as a commodity, a shameful secret, something to boast about, etc., etc.

So am I a convinced polyamorist? Unfortunately not. If there is one thing on which I vehemently disagree with the authors, it is their liking for complexity.

At various points, the authors describe in rapturous terms the forming of a sexual extended family, built up of present and former lovers into a “constellation.” Maybe this sounds appealing to some; but the thought of my ex-girlfriend going on a date with my current partner, who in turn call on an ex-boyfriend to look after their respective kids, while another ex takes a nap on the couch after making love to my roommate, who is also involved with both me and my partner—frankly, this sounds like a nightmare. The amount of time and energy it would take me to manage a single one of those relationships would utterly drain me. And the scope for drama is stupefying to contemplate.

I also don’t share the authors’ conviction that love is a boundless resource. Some highly extroverted people may feel that they can fit any number of new people into their lives without having to boot out the old ones. But I know from experience that a few close friends, plus a romantic partner, is about as much as I can handle at any given moment. Love may not be limited, but time and energy certainly are; and true intimacy requires both.

But I don’t think this book can be fairly evaluated as an attempt to persuade people to be polyamorous. Rather, it is a how-to manual for those who are already on that path. And judged by that standard, I think the book could hardly be better.

View all my reviews

2023 in Books

2023 on Goodreads by Various

My rating: 5 of 5 stars

Though superficially this year has been a disappointing year in reading—I finished considerably fewer books, just over 60 rather than my typical 75 or more—this lack of quantity is largely illusory. A good number of the books I’ve finished this year have been quite long, many over 500 pages and a couple well over 1,000. So in terms of total pages read, I believe I am at par.

For whatever reason, I usually begin the year by getting extremely obsessed with a book. This year, it happened to be Why We Sleep by Matthew Walker, which convinced me that I was chronically under-rested and, thus, in danger of imminent death. For months afterwards, I dutifully tried different strategies for achieving optimal sleep: cutting down on or (briefly) giving up caffeine, sleeping with a mask, going to bed earlier, drinking herbal tea, and avoiding alcohol. It did make a difference. However, probably the best thing I did for my sleep was simply to get a new job that didn’t require me to get up so early. Since then, I have mostly reverted to my old bad habits.

A few books I read this year required so much effort that they became little projects. This can certainly be said of my encounter with the Qur’an—a book difficult for a Westerner to appreciate, I think, though I did my best. I read a few other religious classics to complement my exploration of Islam—some Buddhist sutras and the Egyptian Book of the Dead—though none made nearly so deep an impression on me. Another project, offsetting my spiritual investigation, was my attempt to finally tackle two of the great works in the history of science: Faraday’s Experimental Researches in Electricity and Maxwell’s Treatise on Electricity and Magnetism. In both cases, I achieved only the most basic understanding of these great thinkers, though it was rewarding just the same.

I also finally started on two historical series that had long been on my list. The first is Winston Churchill’s account of World War II—deservedly a classic, and quite fun to read, despite its limitations. The other is Robert Caro’s magisterial biography of Lyndon Johnson, which deserves all the superlatives that can be heaped upon it. Both series, though in different ways, make the fine-grained texture of history more palpable, bringing the past alive with copious detail. I will add to this list, though it isn’t exactly a series, the two books by David Simon: Homicide and The Corner. Though Simon’s scope is smaller—the city of Baltimore rather than a president or a major historical event—he is just as good at revealing the inner workings of human life.

There are a few other smaller categories I should include. One is accounts of historical disasters. This describes John Hersey’s Hiroshima, Svetlana Alexievich’s Voices from Chernobyl, and Walter Lord’s A Night to Remember (about the sinking of the Titanic). Perhaps my morbid fascination with these events reveals something unsavory about my character, but I greatly enjoyed these books. Another category is America. Into this bin I would put William Least Heat-Moon’s famous travelog of the United States, Alan Taylor’s excellent history of the early American colonies, and Laurence Bergreen’s informative biography of Christopher Columbus. I am not sure I am feeling any more patriotic, though it is good to reconnect with one’s native land occasionally.

Last, I ought to mention fiction. This year has been, in retrospect, rather light on literature. True, I finally finished Les Misérables, which took months, and finally reread The Canterbury Tales. I also read the trifecta of great American plays: A Streetcar Named Desire, Death of a Salesman, and Long Day’s Journey into Night, all deserved classics. But the books that stand out in my memory are The Things They Carried (an excellent anti-war book) and Sister Carrie (a devastating deconstruction of the American Dream). I also ought to mention having read my first P.G. Wodehouse and Agatha Christie, both superb in their respective fields.

My goals for 2024 are basically to keep going in the same direction: read a few more spiritual classics, some more influential works of science, continue reading Caro and Churchill, and tackle some rewarding works of literature. As usual, I must express my gratitude to everyone on this site. All of you help make reading a communal activity rather than a lonely endeavor. It is a continual pleasure.


Review: The World as Will and Representation

The World as Will and Representation, Vol. 1 by Arthur Schopenhauer

My rating: 3 of 5 stars

To truth only a brief celebration of victory is allowed between the two long periods during which it is condemned as paradoxical, or disparaged as trivial.

Arthur Schopenhauer is possibly the Western philosopher most admired by non-philosophers. Though revered by figures as diverse as Richard Wagner, Albert Einstein, and Jorge Luis Borges, Schopenhauer has had a comparatively muted influence within philosophy. True, Nietzsche absorbed and then repudiated Schopenhauer, while Wittgenstein and Ryle took kernels of thought and elements of style from him. Compared with that of Hegel, however, whom Schopenhauer detested, his influence has been somewhat limited.

For my part, I came to Schopenhauer fully prepared to fall under his spell. He has much to recommend him. A cosmopolitan polyglot, a lover of art, and a writer of clear prose (at a time when obscurity was the norm), Schopenhauer certainly cuts a more dashing and likable figure than the lifeless, professorial, and opaque Hegel. But I must admit, from the very start, that I was fairly disappointed in this book. Before I criticize it, however, I should offer a little summary.

Schopenhauer published The World as Will and Representation when he was only thirty, and held fast to the views expressed in this book for the rest of his life. Indeed, when he finally published a second edition, in 1844, he decided to leave the original just as it was, only writing another, supplementary volume. He was not a man of tentative conclusions.

He was also not a man of humility. One quickly gets a taste for his flamboyant arrogance, as Schopenhauer demands that his reader read his book twice (I declined), as well as several other essays of his (I took a rain check), in order to fully understand his system. He also, for good measure, berates Euclid for being a bad mathematician, Newton for being a bad physicist, Winckelmann for being a bad art critic, and has nothing but contempt for Fichte, Schlegel, and Hegel. Kant, his intellectual hero, is more abused than praised. But Schopenhauer would not be a true philosopher if he did not believe that all of his predecessors were wrong, and himself wholly right, about everything.

The quickest way into Schopenhauer’s system is through Kant, which means a detour through Hume.

David Hume threw a monkey wrench into the gears of the knowledge process with his problems of causation and induction. In a nutshell, Hume demonstrated that it was illogical either to assert that A caused B, or to conclude that B always accompanies A. As you might imagine, this makes science rather difficult. Kant’s response to this problem was rather complex, but it depended upon his dividing the world into noumena and phenomena. Everything we see, hear, touch, taste, and smell is phenomena—the world as we know it. This world, Kant said, is fundamentally shaped by our perception of it. And—crucially—our perception imposes upon this observed world causal relationships.

This way, Hume’s problems are overcome. We are, indeed, justified in deducing that A caused B, or that B always accompanies A, since that is how our perception shapes our phenomenal world. But Kant pays a steep price for this victory over Hume. For the world of the noumena (the world in-itself, as it exists unperceived and unperceivable) is, indeed, a world where causal thinking does not apply. In fact, none of our concepts apply, not even space and time. The fundamental reality is, in a word, unknowable. By the very fact of perceiving the world, we distort it so completely that we can never achieve true knowledge.

Schopenhauer begins right at this point, with the division of the world into phenomena and noumena. Kant’s phenomena become Schopenhauer’s representation, with only minimal modifications. Kant’s noumena undergo a more notable transformation, and become Schopenhauer’s will. Schopenhauer points out that, if space and time do not exist for the noumena, then plurality must also not exist. In other words, fundamental reality must be single and indivisible. And though Schopenhauer agrees that observation can never reveal anything of significance about this fundamental reality, he believes that our own private experience can. And when we look inside, what we find is will: the urge to move, to act, and to live.

Reality, then, is fundamentally will—a kind of vital urge that springs up out of nothingness. The reality we perceive, the world of space, time, taste, and touch, is merely a kind of collective hallucination, with nothing to tell us about the truly real.

Whereas another philosopher could have turned this ontology into a kind of joyous vitalism, celebrating the primitive urge that animates us all, Schopenhauer arrives at the exact opposite conclusion. The will, for him, is not something to be celebrated, but defeated; for willing leads to desiring, and desiring leads to suffering. All joy, he argues, is merely the absence of suffering. We always want something, and our desires are painful to us. But satisfying desires provides only a momentary relief. After that instant of satiety, desire creeps back in a thousand different forms, to torture us. And even if we do, somehow, manage to satisfy all of our many desires, boredom sets in, and we are no happier.

Schopenhauer’s ethics and aesthetics spring from this predicament. The only escape is to stop desiring, and art is valuable insofar as it allows us to do this. Beauty operates, therefore, by preventing us from seeing the world in terms of our desires, and encouraging us to see it as a detached observer. When we see a real mountain, for example, we may bemoan the fact that we have to climb it; but when we see a painting of a craggy peak, we can simply admire it for what it is. Art, then, has a deep importance in Schopenhauer’s system, since it helps us towards wisdom and enlightenment. Similarly, ethics consists in denying the will-to-live: in a nutshell, asceticism. The more one overcomes one’s desires, the happier one will be.

So much for the summary; on to evaluation.

To most modern readers, I suspect, Schopenhauer’s metaphysics will be the toughest pill to swallow. Granted, his argument that Kant should not have spoken of ‘noumena’ in the plural, but rather of a single unknowable reality, is reasonable; and if we are to equate that deeper reality with something, then I suppose ‘will’ will do. But this is all just a refinement of Kant’s basic metaphysical premises, which I personally do not accept.

Now, it is valid to note that our experience of reality is shaped and molded by our modes of perception and thought. It is also true that our subjective representation of reality is, in essence, fundamentally different from the reality that is being represented. But it strikes me as unwarranted to conclude that reality is therefore unknowable. Consider a digital camera that sprang to life. The camera reasons: “The image I see is a two-dimensional representation of a world of light, shape, and color. But this is just a consequence of my lens and software. Therefore, fundamental reality must not have any of those qualities: it has no dimensions, no light, no shape, and no color! And if I were to stop perceiving this visible world, the world would simply cease to exist, since it is only a representation.”

I hope you can see that this line of reasoning is not sound. While it is true that a camera only detects certain portions of reality, and that a photo of a mountain is a fundamentally different sort of thing than a real mountain, it is also true that cameras use real data from the outside world to create representations—useful, pleasing, and accurate—of that world. If this were not true, we would not buy cameras. And if our senses were not doing something similar, they would not help us to navigate the world. In other words, we can acknowledge that the subjective world of our experience is a kind of interpretive representation of the world-in-itself, without concluding that the world-in-itself has no qualities in common with the world of our representation. Besides, it does seem a violence done to language to insist that the world of our senses is somehow ‘unreal’ while some unknowable shadow realm is ‘really real.’ What is ‘reality’ if not what we can know and experience?

I also think that there are grave problems with Schopenhauer’s ethics, at least as he presents it here. Schopenhauer prizes the ascetics who try to conquer their own will-to-live. Such a person, he thinks, would necessarily be kind to others, since goodness consists in making less distinction between oneself and others. Thus, Schopenhauer’s virtue results from a kind of ego death. However, if all reality, including us, is fundamentally the will to live, what can be gained from fighting it? Some respite from misery, one supposes. But in that case, why not simply commit suicide? Schopenhauer argues that suicide does not overcome the will, but capitulates to it, since it is an action that springs from the desire to be free from misery. Be that as it may, if there is no afterlife, and if life is only suffering punctuated by moments of relief, there does not seem to be a strong case against suicide. There is not even a strong case against murder, since a mass-murderer is arguably ridding the world of more suffering than any sage ever could.

In short, it is difficult to have an ethics if one believes that life is necessarily miserable. But I would also like to criticize Schopenhauer’s argument about desires. It is true that some desires are experienced as painful, and their satisfaction is only a kind of relief. Reading the news is like that for me—mounting terror punctuated by sighs of relief. But this is certainly not true for all desires. Consider my desire for ice cream. There is absolutely nothing painful in it; indeed, I actually take pleasure in looking forward to eating the ice cream. The ice cream itself is not merely a relief but a positive joy, and afterwards I have feelings of delighted satisfaction. This is a silly example, but I think plenty of desires work this way—from seeing a loved one, to watching a good movie, to taking a trip. Indeed, I often find that I have just as much fun anticipating things as actually doing them.

The strongest part of Schopenhauer’s system, in my opinion, is his aesthetics. For I do think he captures something essential about art when he notes that art allows us to see the world as it is, as a detached observer, rather than through the windows of our desires. And I wholeheartedly agree with him when he notes that, when properly seen, anything can be beautiful. But, of course, I cannot agree with him that art merely provides moments of relief from an otherwise torturous life. I think it can be a positive joy.

As you can see, I found very little to agree with in these pages. But, of course, that is not all that unusual when reading a philosopher. Disagreement comes with the discipline. Still, I did think I was going to enjoy the book more. Schopenhauer has a reputation for being a strong writer, and indeed he is, especially compared to Kant or (have mercy!) Hegel. But his authorial personality, the defining spirit of his prose, is so misanthropic and narcissistic, so haughty and bitter, that it can be very difficult to enjoy. And even though Schopenhauer is not an obscure writer, I do think his writing has a kind of droning, disorganized quality that can make him hard to follow. His thoughts do not trail one another in a neat order, building arguments by a series of logical steps, but flow in long paragraphs that bite off bits of the subject to chew on.

Despite all of my misgivings, however, I can pronounce Schopenhauer a bold and original thinker, who certainly made me think. For this reason, at least, I am happy to have read him.


Review: We Were Eight Years in Power

We Were Eight Years in Power: An American Tragedy by Ta-Nehisi Coates

My rating: 4 of 5 stars

Racism was not a singular one-dimensional vector but a pandemic, afflicting black communities at every level, regardless of what rung they occupied.

Ta-Nehisi Coates has turned what could have been a routine re-publication of old essays into a genuine work of art.

The bulk of this book consists of eight essays, all published in The Atlantic, one per year of the Obama presidency. But Coates frames each one with a kind of autobiographical sketch of his life leading up to its writing. The result is, among other things, a surprisingly writerly book—and by that I mean a book written about writing—a kind of Bildungsroman of his literary life. Even on that narrow basis, alone, this book is absorbing, as it shows the struggles of a young writer to hone his craft and find his voice. And that voice is remarkable.

But this book is far more than that. Though the essays tackle diverse topics (Bill Cosby, the Civil War, Michelle Obama, mass incarceration), they successfully build upon one another into a single argument. The kernel of this argument is expressed in the finest and most famous essay in this collection, “The Case for Reparations”: namely, that America must reckon with its racist past honestly and directly if we are ever to overcome white supremacy. Many of the other essays are dedicated to criticizing two principal rivals to this strategy: Respectability Politics and Class-Based Politics.

First, Respectability Politics. This is the notion—popular at least since the time of Booker T. Washington—that if African Americans work hard, strive for an education, and adhere to middle-class norms, then racism will disappear. Though sympathetic to the notion of black self-reliance, Coates is basically critical of this strategy—first, because he believes it has not and will never work; and second, because it is deeply unjust to ask a disenfranchised people to earn their own enfranchisement.

His portraits of the Obamas—both Barack and Michelle—are fascinating for Coates’s ambivalence towards their use of respectability politics. Coates seems nearly in awe of the Obamas’ ability to be simultaneously black and American, and especially of Barack Obama’s power to communicate with equal confidence to the black and white communities. And he is very sympathetic to the plight of a black president, since, as Coates argues, Obama’s ability to take a strong stance regarding race was heavily constrained by white backlash. Coates is, however, consistently critical of Obama’s rhetorical emphasis on hard work and personal responsibility (such as his many lectures about black fatherhood), rather than the historical crimes perpetrated against the black community.

Coates’s other target is the left-wing strategy of substituting class for race—that we ought to help the poor and the working class generally, and in so doing we will disproportionately benefit African Americans. The selling point of this strategy is that, by focusing on shared economic hardships, the left will be able to build a broader coalition without inflaming racial tensions. But Coates is critical of this approach as well. For one, he thinks that racial tension runs far more deeply than class tension, so that this strategy is unlikely to work. What is more, for Coates, this is a kind of evasion—an attempt to sidestep the fundamental problem—and therefore cannot rectify the crime of racism.

The picture that emerges from Coates’s book is rather bleak. If the situation cannot be improved through black advancement or through general economic aid, then what can be done? The only policy recommendation Coates puts forward is Reparations—money distributed to the black community, as a way of compensating for the many ways it has been exploited and disenfranchised. But if I understand Coates correctly, it is not that he believes this money itself would totally solve the problem; it is that such a program would force us to confront the problem of racism head-on, and to collectively own up to the truth of the matter. Virtually nobody—Coates included—thinks that such a program, or such a reckoning, will happen anytime soon, which leaves us in an uncomfortably hopeless situation.

The easy criticism to make of Coates is that his worldview is simplistic, as he insists on reducing all of America’s sins to anti-black racism. But I do not think that this is quite fair. Coates does not deny that, say, economic inequality or sexism are problems; indeed, he notes that these sorts of problems all feed into one another. Furthermore, Coates reminds us that racism is rarely as simple as a rude remark or an insult; rather, it is as complex, diffuse, and widespread as an endemic disease. Coates’s essential point, then, is that racism runs far more deeply and strongly in American life than we are ready to acknowledge—mainly, because persistent racism undermines most of our comfortable narratives or even our policy ideas, not to mention our self-image.

For a brief moment, after Obama’s election, we dreamed of a post-racial America. But, as Coates shows, in the end, Obama’s presidency illustrated our limitations as much as our progress. This was apparent in the sharp drop in Obama’s approval ratings after he criticized a police officer for arresting a black college professor outside of his own house. This was shown, more dramatically, in the persistent rumors that Obama was a Muslim, and of course in Trump’s bigoted birtherism campaign. And this was shown, most starkly, by the fact that Barack Obama (a black man entirely free of scandals, of sterling qualifications, fierce intelligence, and remarkable rhetorical gifts) was followed by Donald Trump (a white man with no experience, thoughtless speech, and infinite scandals, who is quite palpably racist).

As so many people have noted, it is impossible to imagine a black man with Trump’s resumé of scandals, lack of experience, or blunt speaking style approaching the presidency. Even if we focus on one of Trump’s most minor scandals, such as his posing with Goya products after the CEO praised Trump’s leadership, we can see the difference. Imagine the endless fury that Obama would have faced—and not only from the Republican Party—had he endorsed a supporter’s product from the Resolute Desk of the Oval Office! Indeed, as Coates notes, Trump’s ascension is the ultimate rebuke to Respectability Politics: “Barack Obama delivered to black people the hoary message that in working twice as hard as white people, anything is possible. But Trump’s counter is persuasive—work half as hard as black people and even more is possible.”

Whether Obama’s optimism or Coates’s pessimism will be borne out by the country’s future, I do think that Coates makes an essential point: that racism is deeply rooted in the country, and will not simply disappear as African Americans become less impoverished or more ‘respectable.’ Communities across America remain starkly segregated; incarceration rates are high and disproportional; the income, unemployment, and wealth gaps are deep and persistent; and we can see the evidence of all of these structural inequalities in the elevated mortality suffered by the black community during this pandemic. The intractability of this problem is bleak to contemplate, but it is an important problem to grapple with. And it helps that this message is delivered in some of the finest prose by any contemporary writer.


Review: Capital in the Twenty-First Century

Capital in the Twenty-First Century by Thomas Piketty

My rating: 4 of 5 stars

In any case, truly democratic debate cannot proceed without reliable statistics.

For such a hefty book, so full of charts and case studies, the contents of Capital in the Twenty-First Century can be summarized with surprising brevity. Here goes:

For as long as we have reliable records, market economies have produced huge disparities in income and wealth. The simple reason for this is that private wealth has consistently grown several times faster than the economy; furthermore, the bigger your fortune, the faster it grows. As a result, in the 18th and 19th centuries, inequality was high and consistently grew: most of the population owned nothing or close to nothing, and a small propertied class accumulated vast generational wealth. This trend was only reversed by the cataclysms of the 20th century (the World Wars and the Great Depression), as well as the resultant government policies, such as social security and progressive taxes. But all signs indicate that the general pattern is re-emerging, and we are reverting to levels of inequality not seen since the Belle Époque.

This is a relatively simple story, but Piketty goes to great lengths to prove it. Indeed, the value of this book consists in its wealth of data rather than any seismic theoretical insights. Piketty is an artist on graph paper; and with a few simple dots and lines he cuts to the heart of the matter. The large time scales help us to see things invisible in the present moment. For example, while some economists have thought that the ratio of income going to labor and capital was quite stable, Piketty shows that it fluctuates through time and space. Seen in this way, the economy ceases to be a static entity following fixed rules and becomes something all too human, responding to government policy, cultural developments, and historical accidents.

Given the title of this book, comparisons to Marx are inevitable. Piketty himself begins with a short historical overview of the thinkers he considers his predecessors, with Marx given due credit. Piketty even concurs with the basic Marxian logic—that capitalism inevitably leads to a crisis of accumulation, with the capitalists siphoning off more and more resources until none are left for the rest of the population (and revolution as the result). If we have avoided such a crisis, says Piketty, it is because Marx based his analysis on a static economy, not a growing one. The twentieth century was exceptional, not only because of its many calamities, but also because it saw enormous rates of economic growth, which helped to offset the basic logic of capitalist accumulation. Growth rates, however, have slowed significantly in this century.

In summarizing Piketty this way, I fear that I am not doing justice to his appeal. This book is at its most enjoyable when Piketty is at his most empirical—when he is taking the reader through historical trends and case studies. This is extremely refreshing in an economist. I often complain that economics, as a discipline, is needlessly theoretical, getting lost in abstruse debates about how certain variables affect one another, rather than focusing on observable data. Piketty explicitly rejects this style of economics, and puts forward his own method of historical research. This has many advantages. For one, it makes the book far easier to understand, since Piketty’s point is always grounded in a set of facts. What is more, this way of doing economics can be integrated with other disciplines, like history or sociology, rather than existing apart in its own theoretical realm. For example, Piketty often has occasion to bring up the novels of Jane Austen and Honoré de Balzac to illustrate his points.

Well, if Piketty is even approximately correct, then we are left in a rather uncomfortable situation. As Piketty repeatedly notes, vast inequality leads to political instability and the undermining of democratic government, as the super-wealthy are able to accumulate ever-greater influence. So what should we do about it? Here, the difference in temperament between Marx and Piketty is especially apparent. Instead of advocating for any kind of revolution, Piketty puts forward the decidedly wonkish solution of a global tax on wealth. The proposed tax would be progressive (only significantly taxing large fortunes) and would extend throughout the world, so that the rich could not simply hide their money in tax havens. Yet as Piketty himself notes, this solution is nearly as utopian as the proletarian revolution, so I am not sure where that leaves us.

As much as I enjoyed and appreciated this book, nowadays it is somewhat difficult to see why it became so wildly popular and influential. This had more to do with the historical moment in which it was published than the book itself, I suspect. The world was still reeling from the shock of the 2008 crash, and the public was just coming to grips with the scale and ramifications of inequality. In particular, while most people tend to think of inequality in terms of income—partly because most people do not have much wealth to speak of—Piketty’s emphasis on wealth, especially generational wealth, added another dimension to the debate. Piketty evidently succeeded in getting his message across, since I did not find anything in this book shocking. Now we all know about inequality.

While I am no economist, it does seem clear to me that Piketty’s argument has several weak points. For one, his narrow focus on income and wealth distributions leads him to ignore other important factors—most notably, for me, unemployment rates. Further, the major inequality that Piketty identifies—that wealth grows faster than the economy, or r > g—could have used more theoretical elaboration. I wonder: How can private wealth grow so much faster than the economy, if it is a major component of the economy? At one point, Piketty argues that the super rich can afford to hire the best investors and financial consultants; but from what I understand, professional investors do not, on the whole, outperform index funds (which grow along with the economy). Clearly, there is much for professional economists to argue about here.

Whatever its flaws, Capital in the Twenty-First Century is an ambitious and absorbing book that made a lasting and valuable contribution to political debate. Piketty may be no Marx; but for a man who loves charts and graphs, he is oddly compelling.
